From Punch Cards to the Cloud

A VERY Short History of Software

The Rise of Mainframes and PCs

In the early days of computing, roughly the 1950s through the 1970s, computers weren’t boxes on desks. They were mainframes: enormous machines that filled entire rooms and served entire institutions. They were expensive, noisy, and temperamental, yet they were the height of progress.

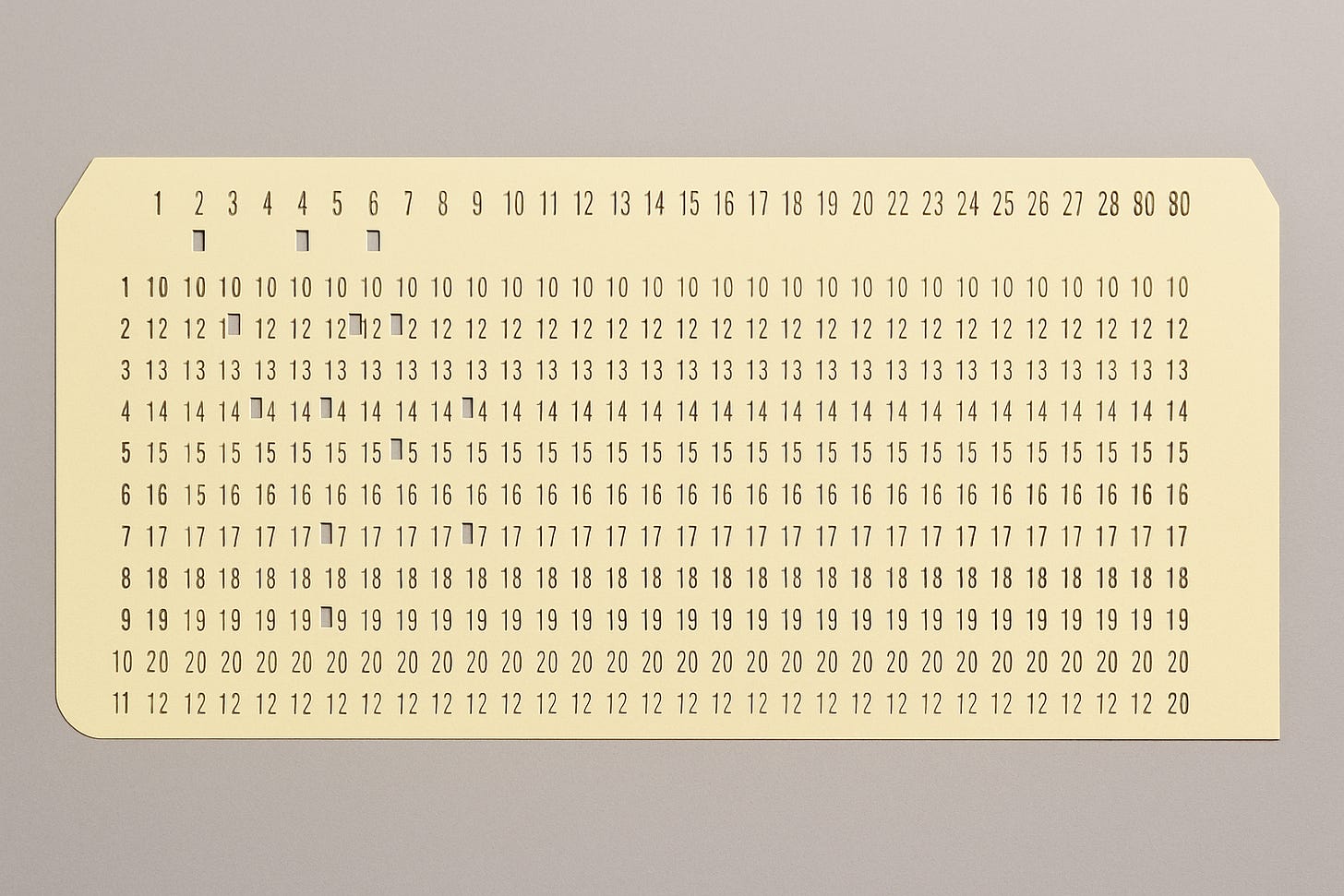

Mainframes didn’t run interactively like modern computers. Instead, they processed batch jobs. Programmers wrote code, often in FORTRAN or COBOL, then punched it onto cards using keypunch machines. Each line of code became one stiff paper card, with holes representing instructions or data.

Once a program was ready, you’d submit your stack of cards to an operator, who would feed them into the reader. Hours later, a printer would deliver your results on wide paper with alternating green and white stripes, lovingly known as green-bar. If one card was missing or out of order, the entire run failed, and you had to start again.

My mother worked on systems like that at Cal Poly Pomona, and I remember the constant chatter of the printers in her lab and her boxes of punch cards. Sometimes I’d “help” by rearranging the cards into a better order or adding my own crayon art. You can guess how that turned out. Let’s just say her code didn’t appreciate my creativity.

As technology moved into the 1970s and 80s, those room-sized systems began to shrink. The personal computer revolution took off.

From Living-Room Computers to Interactive Coding

When the first personal computers appeared, they were astonishingly limited and astonishingly expensive. My father brought one home that connected to our television. It cost several hundred dollars (a serious investment at the time) and had just 4 kilobytes of RAM. Programs loaded slowly from cassette tapes, and storage was measured in kilobytes, not gigabytes.

Soon came machines like the Apple IIe, a marvel for its time, with a built-in keyboard, a floppy-disk drive, and a bright green-on-black display. You could actually see what you were doing.

Even the “games” were mostly text adventures: you’d type something like GO NORTH or PICK UP KEY, and the computer would print a short description of what happened next. Compared to today’s immersive worlds, it was primitive, but it was magic. For the first time, you could talk to the machine and get an immediate response.

It was also the beginning of a mindset every software engineer knows well: “It runs on my machine.” On those early computers, every setup was unique: the programs, the processor, the hard drive, and even the way users organized their files. People installed software wherever it seemed convenient, ignored installer suggestions, and renamed folders to whatever made sense to them. Some updated their operating systems while others never did, so countless versions were in use at once. Programs would update, and their libraries would start to conflict until nothing worked the same way twice. When someone else’s system refused to run the same code, all you could do was shrug and say the only thing that made sense: it works on mine.

The Internet Era to Cloud Computing

By the 1990s, floppy disks gave way to the Internet. Software no longer came in a box with a stack of install disks; it could be downloaded with a click. Files, email, and eventually entire applications began to live online instead of on your hard drive.

That shift, along with the ongoing frustration of “it runs on my computer,” led to the rise of cloud computing. Instead of running everything locally, on our own machines or on a physical server you could point to, we began relying on vast, distributed data centers that handled the heavy lifting behind the scenes.

“It runs on my computer” stopped being the problem, but new ones took its place. Getting all the infrastructure, networks, permissions, and security to work together was never as simple as it looked. Still, the trade-off was enormous. Anyone with an idea could now build something real.

The cloud lowered the barrier to entry. Startups no longer had to buy racks of expensive servers or hire full-time operations teams. You could spin up virtual machines, deploy an app, and have a global product running in minutes. And with serverless computing, you did not have to manage servers at all; you simply uploaded your code and let it run when needed.

That is how SaaS, Software as a Service, was born. Instead of selling disks or downloads, companies sold access. Businesses could subscribe to software that lived entirely online, updated automatically, and scaled as needed.

It is funny. We started with giant mainframes that only a handful of people could access, then moved to personal computers that anyone could own, and finally circled back to massive shared systems. The difference now is that these machines are everywhere, invisible, and always on.
